Blog Post

6.27.2019

How Self-Driving Cars Think: Navigating Double-Parked Vehicles


Every day, San Franciscans drive through six-way intersections, narrow streets, and steep hills. While driving in the city, we check mirrors, follow the speed limit, anticipate other drivers, look for pedestrians, and navigate crowded streets. Those of us who have been driving for years do all this so naturally that we don’t even think about it.

At Cruise, we’re programming hundreds of cars to consider, synthesize, and execute all of these automatic human driving actions. In SF, each car encounters construction, cyclists, pedestrians, and emergency vehicles up to 46 times more often than it would in a suburban environment, and each car learns to maneuver around these features of the city every day.

To give you an idea of how we’re tackling these challenges, we’re introducing a “How Self-Driving Vehicles Think” series. Each post will highlight a different aspect of teaching our vehicles to drive in one of the densest urban environments. In this first edition, we discuss how Cruise self-driving vehicles handle double-parked vehicles (DPVs).

How Cruise autonomous vehicles maneuver around double-parked vehicles

Every self-driving vehicle “thinks” about three things:

Perception: Where am I and what is happening around me?

Planning: Given what’s around me, what should I do next?

Controls: How should I go about doing what I planned?

Driving around double-parked vehicles is one of the most common scenarios we encounter, and it requires a sophisticated application of all three of these elements. On average, we are 24 times more likely to encounter a double-parked vehicle in San Francisco than in a suburban area.

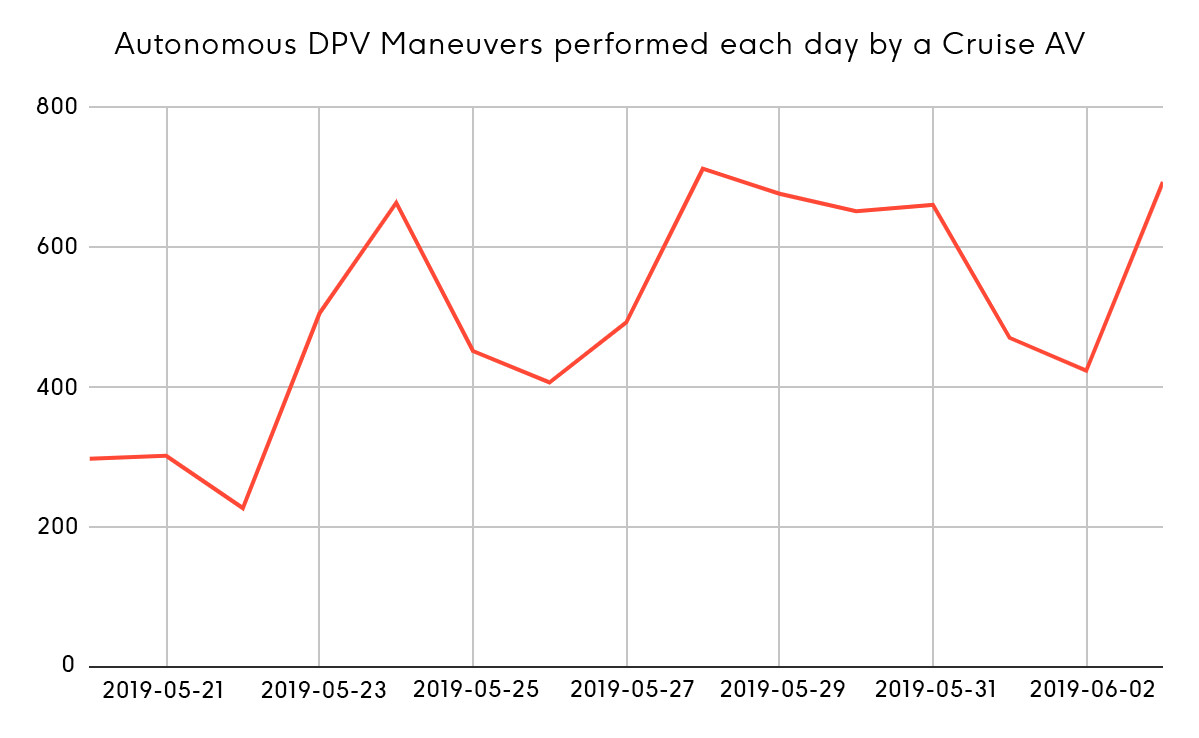

The Cruise fleet typically performs anywhere between 200 and 800 maneuvers into the oncoming lane each day!

Since double-parked vehicles are extremely common in cities, Cruise cars must be equipped to identify and navigate around them as part of the normal traffic flow. Here is how we do it.

Perception

Recognizing whether a vehicle is double-parked requires synthesizing a number of cues at once, such as:

How far the vehicle is pulled over towards the edge of the road

The appearance of brake and hazard lights

The last time we saw it move

Whether we can see around it to identify other cars or obstacles

How close we are to an intersection

We also use contextual cues such as the type of vehicle (delivery trucks, for example, double-park frequently), construction activity, and the scarcity of nearby parking.
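A minimal sketch, assuming the cues above are packed into a fixed-order numeric vector for each tracked vehicle. The `DPVCues` class, its field names, and the 30 s and 100 m saturation limits are hypothetical choices for illustration, not Cruise’s actual schema.

```python
from dataclasses import dataclass


@dataclass
class DPVCues:
    """Hypothetical per-vehicle cues for a double-parked classifier (field names are illustrative)."""
    lateral_offset_ratio: float        # 0.0 = lane center, 1.0 = fully pulled over to the road edge
    hazards_on: bool
    brake_lights_on: bool
    seconds_since_last_moved: float
    can_see_past: bool                 # is the view past the vehicle unobstructed?
    distance_to_intersection_m: float
    is_delivery_truck: bool
    construction_nearby: bool
    parking_scarcity: float            # 0.0 = plenty of open parking, 1.0 = none

    def to_features(self) -> list[float]:
        """Flatten into a fixed-order vector, squashing unbounded values into [0, 1]."""
        return [
            min(max(self.lateral_offset_ratio, 0.0), 1.0),
            float(self.hazards_on),
            float(self.brake_lights_on),
            min(self.seconds_since_last_moved / 30.0, 1.0),     # saturate at a hypothetical 30 s
            float(self.can_see_past),
            min(self.distance_to_intersection_m / 100.0, 1.0),  # saturate at a hypothetical 100 m
            float(self.is_delivery_truck),
            float(self.construction_nearby),
            min(max(self.parking_scarcity, 0.0), 1.0),
        ]
```

Keeping a fixed order matters because a trained network expects each cue in the same input slot on every frame.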

To enable our cars to identify double-parked vehicles, we collect the same information humans do. Using camera, lidar, and radar data, our perception software extracts what the cars around the Cruise autonomous vehicle (AV) are doing:

Cameras provide the appearance and indicator light state for vehicles, and road features (such as safety cones or signage)

Lidars provide distance measurements

Radars provide speeds

All three sensors contribute to identifying the orientation and type of vehicle. Using advanced computer vision techniques, the AV processes the raw sensor returns to identify discrete objects: “human,” “vehicle,” “bike,” etc.

By tracking cars over time, the AV infers which maneuver the driver is making. The local map provides context for the scene, such as parking availability, the type of road, and lane boundaries.
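As an illustration of what tracking over time makes possible, here is a hypothetical helper that computes the “last time we saw it move” cue from a vehicle’s track. The `Observation` type, the `seconds_since_last_moved` name, and the 0.3 m/s threshold are assumptions; a real tracker would also filter sensor noise before estimating speed.

```python
import math
from dataclasses import dataclass


@dataclass
class Observation:
    t: float  # timestamp, s
    x: float  # position in a fixed map frame, m
    y: float


def seconds_since_last_moved(track, now, speed_threshold=0.3):
    """Time since the vehicle last exceeded speed_threshold (m/s, a hypothetical value).

    `track` must be non-empty and time-ordered. If the vehicle was never seen
    moving, returns the time since it was first observed (a lower bound).
    """
    last_moving_t = track[0].t
    for prev, cur in zip(track, track[1:]):
        dt = cur.t - prev.t
        if dt <= 0:
            continue  # skip duplicate or out-of-order timestamps
        speed = math.hypot(cur.x - prev.x, cur.y - prev.y) / dt
        if speed > speed_threshold:
            last_moving_t = cur.t
    return now - last_moving_t
```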

But to make the final decision — is a car double-parked or not — the AV needs to weigh all these factors against one another. This task is perfectly suited for machine learning. The factors are all fed into a trained neural network, which outputs the probability that any given vehicle is double-parked.

In particular, we use a recurrent neural network (RNN) to solve this problem. RNNs stand out from other neural network architectures because they have a sense of “memory.” Each time the RNN is rerun (as new information arrives from the sensors), it includes its previous output as an input. This feedback allows it to observe each vehicle over time and accumulate confidence about whether it is double-parked.
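To make the feedback loop concrete, here is a toy single-unit recurrent cell that, as described above, takes its own previous output as an extra input. The three input features and all weights are hand-picked assumptions; a trained network would learn its weights from labeled data and would typically carry a richer hidden state.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical hand-picked weights; a real network learns these from labeled driving data.
W_FEATURES = (2.0, 1.5, 1.0)  # [pulled_over, hazards_on, stationary], each in [0, 1]
W_PREVIOUS = 4.0              # weight on the previous output: the cell's "memory"
BIAS = -4.0


def dpv_step(features, prev_prob):
    """One recurrent update: current features plus the last output -> new probability."""
    z = BIAS + W_PREVIOUS * prev_prob + sum(w * f for w, f in zip(W_FEATURES, features))
    return sigmoid(z)


def run(sequence, prob=0.0):
    """Feed a time series of feature vectors through the cell; return the probability after each frame."""
    history = []
    for features in sequence:
        prob = dpv_step(features, prob)
        history.append(prob)
    return history
```

Fed three consecutive frames of strong double-parking evidence, the output climbs from about 0.62 after the first frame to about 0.99 after the third, while a vehicle showing none of the cues stays below 0.05. No single frame has to be decisive; confidence builds as the evidence repeats.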

Planning & Controls

Getting from A to B without hitting anything is a well-known problem in robotics. Getting from A to B comfortably without hitting anything is what we work on in the Planning and Controls team. Comfort isn’t defined only by how quickly we accelerate or turn; it also means behaving like a predictable, reasonable driver. Because our vehicles drive themselves, their actions need to be easy for the people around them to interpret. In this case, easy-to-understand (that is, human-like) behavior comes from identifying DPVs and reacting to them in a timely manner.

Once we know that a vehicle in front of us is not an active participant in the flow of traffic, we can start formulating a plan to get around it. Often, we try to change lanes around the obstacle or route away from it. If that is not possible or desirable, we try to generate a path that balances how long we spend in the oncoming lane against our desire to get around the DPV. Every time the car plans a trajectory around a double-parked vehicle, the AV needs to consider where the obstacle is, what other drivers are doing, how to safely bypass the obstacle, and what the car can and cannot perceive.

A Cruise AV navigates around a double-parked truck in the rain, with other vehicles approaching in the oncoming lane. The AV yields right-of-way to two vehicles, which in turn are going around a double-parked vehicle in their own lane.
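The trade-off between time spent in the oncoming lane and getting around the DPV can be sketched as a weighted cost over candidate maneuvers. This is a simplified illustration, not Cruise’s planner: the `Candidate` fields, the 3:1 weighting, and the convention that `None` means “keep waiting” are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    name: str
    feasible: bool             # e.g. is the adjacent lane open, is there a clear reroute?
    time_in_oncoming_s: float  # seconds spent in the oncoming lane
    delay_s: float             # extra travel time versus the unobstructed route


# Hypothetical weights: one second in the oncoming lane "costs" as much as three seconds of delay.
W_ONCOMING = 3.0
W_DELAY = 1.0


def cost(c: Candidate) -> float:
    return W_ONCOMING * c.time_in_oncoming_s + W_DELAY * c.delay_s


def choose_maneuver(candidates: list[Candidate]) -> Optional[Candidate]:
    """Pick the cheapest feasible candidate; None means keep waiting behind the DPV."""
    feasible = [c for c in candidates if c.feasible]
    return min(feasible, key=cost, default=None)
```

With the lane change blocked, a short oncoming pass (cost 3 × 4 + 6 = 18) beats a wide pass (3 × 7 + 3 = 24) and a long reroute (40); once the adjacent lane opens, the lane change (cost 2) wins because it spends no time in the oncoming lane.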

Every move we plan takes into account the actions of the road users around us, and how we predict they will respond to our actions. With a reference trajectory planned out, we are ready to make the AV execute a maneuver.
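A minimal sketch of one such check, assuming a simple gap-acceptance rule: before entering the oncoming lane, predict how far each oncoming vehicle will travel over the time we need plus a safety margin, using a constant-acceleration model. The model, the 2 s margin, and all names are assumptions for illustration. Because braking is part of the prediction, a driver who slows down to let us through can open a gap that a constant-speed driver would not.

```python
from dataclasses import dataclass


@dataclass
class OncomingVehicle:
    distance_m: float   # distance to where its path meets ours
    speed_mps: float
    accel_mps2: float   # negative if it is braking (e.g. yielding to us)


def distance_covered(v: float, a: float, t: float) -> float:
    """Constant-acceleration prediction, clamped so a braking vehicle stops rather than reversing."""
    if a < 0:
        t = min(t, v / -a)
    return v * t + 0.5 * a * t * t


def safe_to_go_around(oncoming, time_needed_s, margin_s=2.0):
    """True if no oncoming vehicle is predicted to reach the conflict point within the window."""
    horizon = time_needed_s + margin_s
    return all(
        distance_covered(o.speed_mps, o.accel_mps2, horizon) < o.distance_m
        for o in oncoming
    )
```

A car 30 m away at a steady 10 m/s would cover 60 m in a 6 s window, so we wait; the same car braking at 4 m/s² stops after 12.5 m, so the maneuver can proceed.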

There are many ways to compute the optimal actions for executing a maneuver (for example, linear quadratic control), but we also need to respect the constraints of our vehicle, such as how quickly we can turn the steering wheel or how quickly the car responds to a given input. To find the optimal way to execute a trajectory under these constraints, we use Model Predictive Control (MPC) for motion planning. Under the hood, MPC algorithms use a model of how the system behaves (in this case, how we have learned to expect the world around us to evolve and how we expect our car to react) to figure out the optimal action to take at each step.
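A minimal sketch of the receding-horizon idea, assuming a hypothetical one-dimensional lateral model: lateral offset and lateral speed, with a cap on how much the lateral speed can change per step standing in for the steering-rate limit. All constants are made up for illustration, and an exhaustive search over a small discretized action set is swapped in for the numerical optimizer a production MPC would use. At every step it scores every action sequence over the horizon with the model, applies only the first action of the best one, and re-plans.

```python
import itertools

# Hypothetical 1-D lateral model: y = lateral offset (m), v = lateral speed (m/s).
DT = 0.2                   # control period, s
DV = 0.1                   # max change in lateral speed per step (stand-in for a steering-rate limit)
V_MAX = 0.6                # cap on lateral speed, m/s
HORIZON = 6                # steps of look-ahead (1.2 s)
R = 0.5                    # weight on control effort
Q_V = 1.0                  # terminal weight on leftover lateral speed
ACTIONS = (-DV, 0.0, DV)   # discretized control choices


def rollout_cost(y, v, target, plan):
    """Simulate one candidate plan through the model; None if it violates the speed cap."""
    cost = 0.0
    for dv in plan:
        v += dv
        if abs(v) > V_MAX + 1e-9:
            return None
        y += v * DT
        cost += (y - target) ** 2 + R * dv ** 2
    return cost + Q_V * v ** 2


def mpc_step(y, v, target):
    """Search every action sequence over the horizon; return only the first action of the best one."""
    best_plan, best_cost = None, float("inf")
    for plan in itertools.product(ACTIONS, repeat=HORIZON):
        cost = rollout_cost(y, v, target, plan)
        if cost is not None and cost < best_cost:
            best_plan, best_cost = plan, cost
    return best_plan[0]


def simulate(target=1.0, steps=80):
    """Closed loop: plan, apply the first action, advance the (here, perfect) model, re-plan."""
    y, v = 0.0, 0.0
    history = []
    for _ in range(steps):
        dv = mpc_step(y, v, target)
        v += dv
        y += v * DT
        history.append((y, v, dv))
    return history
```

Starting at rest with a 1 m target offset, the controller accelerates sideways, brakes once its look-ahead shows it closing on the target, and settles near 1 m without exceeding its speed or rate limits. Because only the first action of each plan is applied before re-planning, the controller can correct itself when the world stops matching the model’s predictions.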

Finally, these instructions are sent down to the controllers, which govern the movement of the car. Putting it all together, we get:

After yielding to the cyclist, we see that an oncoming vehicle is letting us complete our maneuver around the double-parked truck. It is important to recognize these situations and complete the maneuver so that we support the flow of traffic.

San Francisco is famously difficult to drive in, but we at Cruise cherish the opportunity to learn from the city and make it safer. With its mid-block crosswalks, narrow streets, construction zones, and steep hills, San Francisco’s complex driving environment allows us to iterate and improve quickly, so we can achieve our goal of making roads safer.

Over the coming months, we look forward to sharing more “How Self-Driving Vehicles Think” highlights from our journey.

If you’re interested in joining engineers from over 100 disciplines who are tackling one of the greatest engineering challenges of our generation, join us.